Introduction

Faced with the enormous and ever-growing amounts of data being generated in the world today, software architects need to pay special attention to the scalability of their solutions. They must also design systems that can, when needed, handle many thousands of concurrent users. It’s not easy, but designing for massive scalability is an absolute necessity.

Software architects have several options for designing scalable systems. They can scale vertically by using bigger machines with dozens of cores. They can use data distribution (replication) techniques to scale horizontally for increasing numbers of users. And they can scale data volume horizontally through the use of a data partitioning strategy. In practice, software architects will employ several of these techniques, trading off hardware costs, code complexity, and ease of deployment to suit their particular needs.

This paper will discuss how InterSystems IRIS Data Platform™ supports vertical scalability and horizontal scalability of both user and data volumes. It will outline several options for distributing and partitioning data and/or user volume, giving scenarios in which each option would be particularly useful. Finally, this paper will talk about how InterSystems IRIS helps simplify the configuration and provisioning of distributed systems.

Vertical Scaling

Perhaps the simplest way to scale is to do so “vertically” – deploy on a bigger machine with more CPUs and memory. InterSystems IRIS supports parallel processing of SQL and includes technology for optimizing the use of CPUs in multi-core machines.

However, there are practical limits to what can be achieved through vertical scaling alone. For one thing, even the largest available machine may not be able to handle the enormous data volumes and workloads required by modern applications. Also, “big iron” can be prohibitively expensive. Many organizations find it more cost-effective to buy, say, four 16-core servers than one 64-core machine.

Capacity planning for single-server architectures can be difficult, especially for solutions that are likely to have widely varying workloads. Having the ability to handle peak loads may result in wasteful underutilization during off hours. On the other hand, having too few cores may cause performance to slow to a crawl during periods of high usage. In addition, increasing the capacity of a single-server architecture means buying an entirely new machine. Adding capacity “on the fly” is impossible.

In short, although it is important for software to leverage the full potential of the hardware on which it is deployed, vertical scalability alone is enough for only the most static workloads.

Horizontal Scaling

For all of the reasons given above, most organizations seeking massive scalability will deploy on networked systems, scaling workloads and/or data volumes “horizontally” by distributing the work across multiple servers. Typically, each server in the network will be an affordable machine, but larger servers may be used if needed to take advantage of vertical scalability as well.

Software architects will recognize that no two workloads are the same. Some modern applications may be accessed by hundreds of thousands of users concurrently, racking up very high numbers of small transactions per second. Others may have only a handful of users, but query petabytes of data. Both are very demanding workloads, but they require different approaches to scalability. We will start by considering each scenario separately.

Horizontal Scaling of User Volume

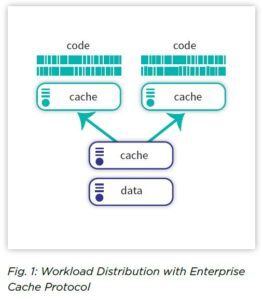

To support a huge number of concurrent users (or transactions), InterSystems IRIS relies on unique data caching technology called Enterprise Cache Protocol (ECP).

Within a network of servers, one will be configured as the data server where data is persisted. The others will be configured as application servers. Each application server runs an instance of InterSystems IRIS and presents data to the application as though it were a local database. Data is not persisted on the application servers. They are there to provide cache and CPU processing power.

User sessions are distributed among the application servers, typically through a load balancer, and queries are satisfied from the local application server cache, if possible. Application servers will only retrieve data from the data server if necessary. InterSystems IRIS automatically synchronizes data between all cluster participants.
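The read path described above can be pictured with a small conceptual sketch. It is not InterSystems IRIS code and does not use the ECP API: `DataServer`, `AppServer`, and the round-robin balancer are hypothetical names invented for illustration, and the sketch deliberately leaves out the automatic synchronization of cached data that the platform performs.

```python
from itertools import cycle


class DataServer:
    """Hypothetical stand-in for the single server where data is persisted."""

    def __init__(self, rows):
        self.rows = dict(rows)
        self.reads = 0  # counts how often an application server had to ask

    def fetch(self, key):
        self.reads += 1
        return self.rows[key]


class AppServer:
    """Hypothetical application server: holds a cache, persists nothing."""

    def __init__(self, data_server):
        self.data_server = data_server
        self.cache = {}

    def get(self, key):
        # Serve from the local cache if possible; otherwise ask the data server.
        if key not in self.cache:
            self.cache[key] = self.data_server.fetch(key)
        return self.cache[key]


data_server = DataServer({"acct-1": 100, "acct-2": 250})
app_servers = [AppServer(data_server), AppServer(data_server)]
balancer = cycle(app_servers)  # naive round-robin load balancer (assumption)

# Four requests for the same key, spread across two application servers.
results = [next(balancer).get("acct-1") for _ in range(4)]
print(results, data_server.reads)
```

In this toy run the data server is contacted once per application server rather than once per request, which is the effect the caching layer is meant to achieve.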

With the compute work taken care of by the application servers, the data server can be dedicated mostly to persisting transaction outcomes. Application servers can easily be added to, or removed from, the cluster as workloads vary. For example, in a retail use case, you may want to add a few application servers to deal with the exceptional load of Black Friday and switch them off again after the holiday season has finished.
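As a rough illustration of growing and shrinking the application tier, the sketch below routes user sessions round-robin over whatever servers are currently in the pool. The `AppServerPool` class, the server names, and the session counts are all hypothetical; a real deployment would rely on its own load balancer and on the platform's provisioning tools.

```python
class AppServerPool:
    """Hypothetical pool of application servers behind a round-robin balancer."""

    def __init__(self, names):
        self.names = list(names)
        self.next_index = 0

    def add(self, name):
        self.names.append(name)

    def remove(self, name):
        self.names.remove(name)
        self.next_index = 0  # restart the rotation on the smaller pool

    def route(self):
        """Pick the server for the next user session."""
        name = self.names[self.next_index % len(self.names)]
        self.next_index += 1
        return name


def sessions_per_server(pool, sessions):
    """Route a number of sessions and count how many each server received."""
    counts = {}
    for _ in range(sessions):
        name = pool.route()
        counts[name] = counts.get(name, 0) + 1
    return counts


pool = AppServerPool(["app-1", "app-2"])
normal = sessions_per_server(pool, 1200)

pool.add("app-3")  # scale out for the peak period
pool.add("app-4")
peak = sessions_per_server(pool, 1200)

pool.remove("app-3")  # scale back in afterwards
pool.remove("app-4")
print(normal, peak, pool.names)
```

With the same number of sessions, each server handles half as many once the pool is doubled, while the data server itself is unchanged.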

Application servers are most useful for applications where large numbers of transactions must be performed, but each transaction only affects a relatively small portion of the entire data set. Deployments that use application servers with ECP have been shown to support many thousands of concurrent users in a variety of industries.

Horizontal Scaling of Data Volume

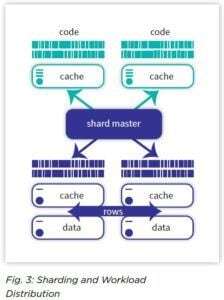

When queries (usually analytic queries) must access a large amount of data, the “working dataset” that needs to be cached in order to support the query workload efficiently may exceed the memory capacity of a single machine. InterSystems IRIS provides a capability called sharding, which physically partitions large database tables across multiple server instances. Applications still access a single logical table on an instance designated as the shard master. The shard master decomposes incoming queries and sends them to the shard servers, each of which holds a distinct portion of the table data and associated indices. The shard servers process the shard-local queries in parallel and send their results back to the shard master for aggregation.
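The decompose, run-in-parallel, and aggregate pattern can be sketched in a few lines. This is a conceptual model only, not the platform's query engine: the in-memory `shards` list, the function names, and the sample rows are assumptions made for illustration. It computes an average by having each shard return a partial total and count, which the master then combines.

```python
from concurrent.futures import ThreadPoolExecutor

# Hypothetical shards: each holds a distinct portion of an "orders" table.
shards = [
    [{"region": "EU", "amount": 10}, {"region": "US", "amount": 5}],
    [{"region": "EU", "amount": 7}],
    [{"region": "US", "amount": 20}, {"region": "EU", "amount": 3}],
]


def shard_local_sum(rows, region):
    """Runs on one shard server: partial (total, count) over its own rows."""
    amounts = [r["amount"] for r in rows if r["region"] == region]
    return sum(amounts), len(amounts)


def shard_master_avg(region):
    """Runs on the shard master: fan out, then combine the partial results."""
    with ThreadPoolExecutor(max_workers=len(shards)) as pool:
        partials = list(pool.map(lambda rows: shard_local_sum(rows, region), shards))
    total = sum(t for t, _ in partials)
    count = sum(c for _, c in partials)
    return total / count if count else None


eu_avg = shard_master_avg("EU")
print(eu_avg)
```

Returning totals and counts rather than per-shard averages matters: averaging the shard averages would give the wrong answer whenever shards hold different numbers of matching rows.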

Data is partitioned among shard servers according to a shard key, which can be automatically managed by the system, or defined by the software architect based on selected table columns. Through the careful selection of shard keys, tables that are often joined can be co-sharded, so rows from those tables that would typically be joined together are stored on the same shard server, enabling the join to happen entirely local to each shard server, and thus maximizing parallelization and performance.
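To see why co-sharding keeps joins local, consider the following sketch. It assumes a simple hash-modulo placement scheme and two made-up tables that are both partitioned on `customer_id`. The placement function, table contents, and shard count are illustrative assumptions, not a description of how InterSystems IRIS assigns rows internally.

```python
import zlib

NUM_SHARDS = 3


def shard_for(key):
    """Map a shard key value to a shard index with a stable hash (assumed scheme)."""
    return zlib.crc32(str(key).encode("utf-8")) % NUM_SHARDS


customers = [(1, "Ada"), (2, "Grace"), (3, "Edsger"), (4, "Barbara")]
# Each order row is (order_id, customer_id, amount).
orders = [(101, 1, 30), (102, 2, 15), (103, 1, 12), (104, 4, 99)]

# Both tables are partitioned on customer_id, so matching rows land together.
customer_shards = [dict() for _ in range(NUM_SHARDS)]
order_shards = [[] for _ in range(NUM_SHARDS)]
for cust_id, name in customers:
    customer_shards[shard_for(cust_id)][cust_id] = name
for order_id, cust_id, amount in orders:
    order_shards[shard_for(cust_id)].append((order_id, cust_id, amount))


def local_join(shard_index):
    """Join orders to customers using only the rows stored on one shard."""
    names = customer_shards[shard_index]
    return [(order_id, names[cust_id], amount)
            for order_id, cust_id, amount in order_shards[shard_index]]


joined = sorted(row for i in range(NUM_SHARDS) for row in local_join(i))
print(joined)
```

Because an order and its customer always hash to the same shard, `local_join` never needs a row from another shard, so every shard can run its part of the join independently.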

As data volumes grow, additional shards can easily be added. Sharding is completely transparent to the application and to users.

Not all tables need to be sharded. For example, in analytics applications, fact tables (e.g. orders in a retail scenario) are usually very large, and will be sharded. The much smaller dimension tables (e.g. product, point of sale, etc.) will not be. Non-sharded tables are persisted on the shard master. If a query requires joins between sharded and non-sharded tables, or if data from two different shards must be joined, InterSystems IRIS uses an efficient ECP-based mechanism to satisfy the request correctly. In these cases, InterSystems IRIS will only share between shards the rows that are needed, rather than broadcasting entire tables over the network, as many other technologies would. InterSystems IRIS transparently improves the efficiency and performance of big data query workloads through sharding, without limiting the types of queries that can be satisfied.
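The difference between shipping only the needed rows and broadcasting a whole table can be illustrated with a toy model. The tables, the `fetch_products` helper, and the row counter below are hypothetical; the sketch does not represent the ECP-based mechanism itself, only the idea of requesting just the dimension rows that each shard's fact rows reference.

```python
# Non-sharded dimension table, held on the (hypothetical) shard master.
products = {1: "laptop", 2: "phone", 3: "tablet", 4: "monitor", 5: "camera"}

# Sharded fact table: (order_id, product_id, quantity) rows split across shards.
order_shards = [
    [(101, 1, 2), (102, 1, 1)],
    [(103, 3, 4)],
]

stats = {"rows_shipped": 0}  # dimension rows sent over the "network"


def fetch_products(product_ids):
    """Master-side lookup that returns only the requested dimension rows."""
    needed = {pid: products[pid] for pid in product_ids}
    stats["rows_shipped"] += len(needed)
    return needed


def shard_join(fact_rows):
    """Shard-side join: request just the product rows this shard references."""
    lookup = fetch_products({pid for _, pid, _ in fact_rows})
    return [(order_id, lookup[pid], qty) for order_id, pid, qty in fact_rows]


result = [row for shard in order_shards for row in shard_join(shard)]

# A broadcast join would instead copy the whole dimension table to every shard.
broadcast_cost = len(products) * len(order_shards)
print(result, stats["rows_shipped"], broadcast_cost)
```

In this small example two dimension rows cross the network instead of ten. The gap widens as the dimension table grows or as more shards are added.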

The InterSystems IRIS architecture enables complex multi-table joins when querying distributed, partitioned data sets without requiring co-sharding, without replicating data, and without requiring entire tables to be broadcast across networks.

Scaling Both User and Data Volumes

Many modern solutions must simultaneously support both a high transaction rate (user volume) and analytics on large volumes of data. One example is a private wealth management application that provides dashboards summarizing clients’ portfolios and risk in real time, based on current market data.

InterSystems IRIS enables such Hybrid Transactional and Analytical Processing (HTAP) applications by allowing application servers and sharding to be used in combination. Application servers can be added to the architecture pictured in Figure #2 to distribute the workload on the shard master. Workloads and data volumes can be scaled independently of each other, depending on the needs of the application.

When applications require the ultimate in scalability (for example, if a predictive model must score every record in a large table while new records are being ingested and queried at the same time) each individual data shard can act as the data server in an ECP model. We refer to the application servers that share the workloads on data shards as “query shards.” This, combined with the transparent mechanisms for ensuring high availability of an InterSystems IRIS cluster, provides solution architects with everything they need to satisfy their solution’s unique scalability and reliability requirements.

The comparative performance and efficiency of InterSystems IRIS’ approach to sharding has been demonstrated and documented in a benchmark test validated by a major technology analyst firm (see note 1). In tests of an actual mission-critical financial services use case, InterSystems IRIS was shown to be faster than several highly specialized databases, while requiring less hardware and accessing more data.

Flexible Deployment

InterSystems IRIS gives software developers a great deal of flexibility when it comes to designing a highly efficient, scalable solution. But scalability may come at the cost of increased complexity, as additional servers, taking on a variety of roles, are added to the architecture. InterSystems IRIS simplifies the provisioning and deployment of servers (whether physical or virtual) in a distributed architecture.

InterSystems enables simple scripts to be used for configuring InterSystems IRIS containers as data servers, shard servers, shard masters, application servers, and so on. Containers can be deployed easily in public or private clouds. They are also easily decommissioned, so scalable architectures can be designed to grow or contract with fluctuating needs.

Conclusion

Massive scalability is a requirement for modern applications, particularly Hybrid Transactional and Analytical Processing applications that must handle very high workloads and data volumes simultaneously. InterSystems IRIS Data Platform gives software architects options for cost-effectively scaling their applications. It supports vertical scaling, application servers for horizontally scaling user volume, and a highly efficient approach to sharding for horizontally scaling data volume that eliminates the need for network broadcasts. All these technologies can be used independently or in combination to tailor a scalable architecture to an application’s specific requirements.

For more information about InterSystems IRIS Data Platform, visit InterSystems.com/IRIS.

About InterSystems

InterSystems is the engine behind the world’s most important applications. In healthcare, finance, government, and other sectors where lives and livelihoods are at stake, InterSystems is the power behind what matters™. Founded in 1978, InterSystems is a privately held company headquartered in Cambridge, Massachusetts (USA), with offices worldwide, and its software products are used daily by millions of people in more than 80 countries. For more information, visit InterSystems.com.

1 - InterSystems IRIS Data Platform: A Unified, Efficient Data Platform for Fast Business Insight, Kerry Dolan, Senior IT Validation Analyst, Enterprise Strategy Group, March 2018.